The Whole Brain Architecture Initiative (WBAI) believes that the shortest path toward artificial general intelligence (AGI) is the whole-brain architecture approach, which learns from the architecture of the entire brain. To promote the development of whole-brain architecture, we have held four hackathons. This year, we are planning the 5th WBA Hackathon, with working memory as its theme. Since working memory is necessary for ‘fluid’ cognitive functions such as planning, realizing it is an important step toward AGI.

Prior to the hackathon, we are holding a modelathon to collect computational cognitive neuroscience models of working memory: we solicit models of the brain mechanisms that solve the working memory tasks to be addressed in the upcoming hackathon (see below). Models rated highly in an academic review will receive awards, and grants of up to 50,000 yen will be offered for outstanding models.

Participants may use their models in the hackathon and/or in academic publications. Because the submitted models are released under a Creative Commons license, they may be referred to by hackathon participants and others interested in this field.

The modelathon is organized in collaboration with Project AGI.

Summary

- Registration is on a team basis (a team consists of one or more members).

- Please prepare a GitHub page to host your model.

- Deadline: September 30th, 2020

- Apply using this form.

- Models

  - Submit only one model per application.

  - The submitted model shall not include any software code.

  - By the deadline, place the model description (English text of up to 2,000 words plus an architectural diagram, in PDF or Markdown format) and the model in BIF format (see below) under a Creative Commons license on the GitHub page specified in the application form.

  - The model will be evaluated according to the GPS criteria: generality, biological plausibility, and simplicity. Please address each of the following in the model description:

    - Generality: a higher rating will be given to a more general model of working memory, one that solves not only the hackathon tasks but also other tasks.

    - Biological plausibility: a model based on biological findings will be appreciated.

    - Simplicity: all other things being equal, a more concise model will be given a higher rating.

  - Applications will also be evaluated on their feasibility of implementation, novelty (no plagiarism), and intelligibility.

- Results will be announced around the end of October 2020.

⇒ The Significant Contribution Award for the Working Memory Modelathon

- Q&A: We will invite applicants to a Slack workspace set up for the modelathon/hackathon.

- If you have any questions before applying, please use the contact form.

Hackathon task overview

- (Delayed) Match-to-sample tasks will be used.

A match-to-sample task requires the agent to judge whether the presented figures are the same.

Operations such as rotation and scaling may be applied to the figures being compared (invariant object identification). In the delayed match-to-sample task, the judgment must be made after the sample figure has disappeared from the screen.

- Given a new task set, the agent must learn which features to attend to from a few example answers presented before it works on the task (few-shot imitative rule learning).

- Agents may be pre-trained (e.g., to learn that selecting a button leads to a reward).

- The agent is assumed to have central and peripheral vision, as humans do: only the central field of vision has high resolution, so to recognize a figure precisely, the agent must bring it into the central field. Comparing figures this way requires working memory, because the agent must retain information about one figure while shifting its gaze to another.
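A minimal sketch of central versus peripheral vision, assuming the screen is a grayscale pixel grid, the central field is a square window around the gaze point, and peripheral vision is approximated by block-averaging the whole screen. The function names (`fovea`, `periphery`, `observe`) and the `radius` and `block` parameters are hypothetical and do not describe the actual hackathon environment.

```python
from typing import List, Tuple

Image = List[List[float]]  # hypothetical: grayscale image, row-major, values in [0, 1]


def fovea(image: Image, cx: int, cy: int, radius: int) -> Image:
    """Full-resolution square crop centred on the gaze point (cx, cy).

    Pixels outside the screen are padded with 0.0.
    """
    h, w = len(image), len(image[0])
    return [
        [image[y][x] if 0 <= y < h and 0 <= x < w else 0.0
         for x in range(cx - radius, cx + radius + 1)]
        for y in range(cy - radius, cy + radius + 1)
    ]


def periphery(image: Image, block: int) -> Image:
    """Low-resolution view of the whole screen: mean over block x block tiles."""
    h, w = len(image), len(image[0])
    out = []
    for by in range(0, h, block):
        row = []
        for bx in range(0, w, block):
            tile = [image[y][x]
                    for y in range(by, min(by + block, h))
                    for x in range(bx, min(bx + block, w))]
            row.append(sum(tile) / len(tile))
        out.append(row)
    return out


def observe(image: Image, gaze: Tuple[int, int], radius: int = 2, block: int = 4):
    """One hypothetical observation: a sharp central field plus a blurred periphery."""
    cx, cy = gaze
    return fovea(image, cx, cy, radius), periphery(image, block)
```

Because only the central window preserves detail, an agent comparing two figures must fixate one, keep what it saw, and then fixate the other; the blurred periphery can only tell it roughly where to look next.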

Please see below for more details on the tasks.

Reference architecture

Although the mechanism of working memory has not been fully elucidated, the following can reasonably be said:

- Since working memory involves perceptual representations, sensory areas (neocortical areas other than the frontal lobe) are involved.

- The content of working memory (past perceptual content) and the present perceptual content must be distinguished within the cortex.

- Since working memory is by definition involved in executive function, the frontal lobe, which is thought to underlie executive function, is involved.

- Thus, working memory involves a network between the frontal lobe and the sensory cortices.

- The executive function (frontal lobe) controls the retention and termination of working memory by interacting with the perceptual areas.
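The points above can be illustrated with a toy gated buffer, assuming the executive (frontal) side sends one of three hypothetical control signals per time step and the buffer stands in for perceptual content held in sensory areas. This is a sketch of the general idea of gated retention, not the reference architecture below; `Gate` and `GatedWorkingMemory` are hypothetical names.

```python
from enum import Enum
from typing import Optional


class Gate(Enum):
    """Hypothetical control signals sent by the executive (frontal) module."""
    HOLD = "hold"    # retention: keep the current memory content
    STORE = "store"  # overwrite memory with the current percept
    CLEAR = "clear"  # termination: drop the memory content


class GatedWorkingMemory:
    """Toy buffer that keeps past perceptual content apart from the present percept."""

    def __init__(self) -> None:
        self.content: Optional[dict] = None  # working-memory content (a past percept)

    def step(self, percept: Optional[dict], gate: Gate) -> Optional[dict]:
        if gate is Gate.STORE:
            # Copy so later changes to the percept do not leak into memory.
            self.content = dict(percept) if percept is not None else None
        elif gate is Gate.CLEAR:
            self.content = None
        # Gate.HOLD leaves self.content unchanged, whatever the current percept is.
        return self.content


wm = GatedWorkingMemory()
wm.step({"shape": "star", "color": "red"}, Gate.STORE)  # sample is visible
wm.step(None, Gate.HOLD)                                # sample occluded, memory retained
```

Keeping `content` separate from the `percept` argument mirrors the requirement that remembered and current perceptual content be distinguishable; in a brain model the gating signal would itself have to be learned.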

We are planning to provide the following reference architecture for the 5th WBA Hackathon, which modelathon participants are encouraged but not obliged to use.

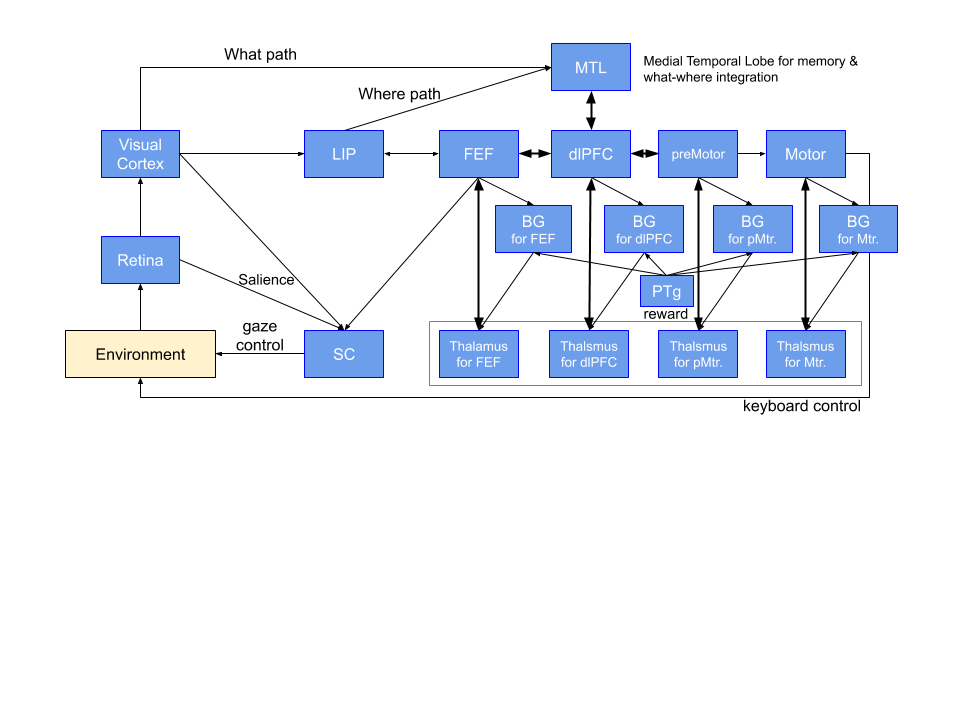

Fig. 1: Whole-brain reference architecture for the 5th WBA Hackathon

SC: superior colliculus, LIP: lateral intraparietal area, FEF: frontal eye field, BG: basal ganglia, PTg: pedunculopontine tegmental nucleus

References

- For a survey on working memory:

  Shintaro Funahashi: “Working Memory in the Prefrontal Cortex,” Brain Sciences, 7(5):49. doi:10.3390/brainsci7050049 (2017)

- For a general introduction to computational cognitive neuroscience:

  CCNBook: https://github.com/CompCogNeuro/ed4

- For an introduction to gaze control:

  Okihide Hikosaka, et al.: “Role of the Basal Ganglia in the Control of Purposive Saccadic Eye Movements,” Physiol Rev, 80(3):953-78. doi:10.1152/physrev.2000.80.3.953 (2000)

Brain Information Flow Diagram (BIF)

WBAI proposes the Brain Information Flow Diagram (BIF) as a format for describing and sharing whole-brain architectures. Modelathon participants are requested to submit their model in BIF (a spreadsheet format). The BIF of the reference architecture can be found here.

Hackathon task details

Note that although the task specification is fixed for the purposes of the modelathon, it is subject to change when the hackathon is announced.

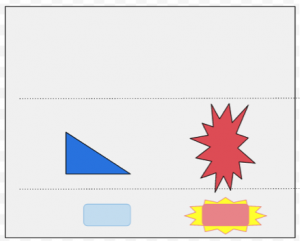

Test Screen

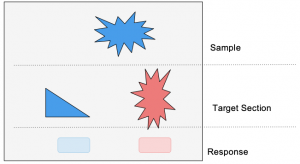

The screen presented to the agent consists of three sections (Fig. 2).

Main tests

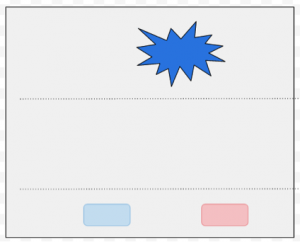

In the delayed sample matching task, the sample figure is presented without target figures at the beginning.

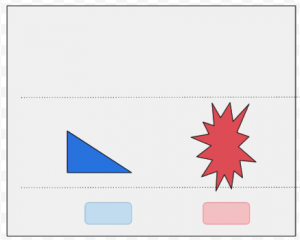

Then the sample figure is occluded, and the target figure is displayed after a period of time (up to several seconds). While the target figure is being displayed, the agent chooses a button in the response section. (Fig.4)

After a certain amount of time (up to a few seconds), the button corresponding to the correct target will flash (Fig. 5). At this point, the targets are still visible.

If the agent has responded correctly, it is rewarded. The correct answer is to choose the button below the ‘same’ figure as the sample figure (in the example above, the shape of the correct figure is the same as the sample, while the color and orientation differ).

Note that an option for ‘no same figure’ may be added in the hackathon.
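A minimal sketch of how a correct answer and reward could be determined, assuming figures are encoded as symbolic attributes (the real agent sees pixels) and that the matching attribute for the task set is known to the environment. `Figure`, `PHASES`, `correct_button`, and `reward` are hypothetical names, not the hackathon's actual API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Figure:
    shape: str
    color: str
    orientation: int = 0  # degrees; ignored when matching (invariant identification)
    scale: float = 1.0    # ignored when matching (invariant identification)


# Hypothetical phase labels for one delayed trial, in order. The text gives the
# delay as up to several seconds and the wait before feedback as up to a few seconds.
PHASES = ("sample", "delay", "targets_and_response", "feedback_flash")


def correct_button(sample: Figure, targets: List[Figure], attribute: str) -> Optional[int]:
    """Index of the unique target matching the sample on `attribute`, else None.

    None covers the case a 'no same figure' option would be needed for.
    """
    matches = [i for i, t in enumerate(targets)
               if getattr(t, attribute) == getattr(sample, attribute)]
    return matches[0] if len(matches) == 1 else None


def reward(sample: Figure, targets: List[Figure], attribute: str, chosen: int) -> float:
    """1.0 if the chosen button is the correct one, otherwise 0.0."""
    return 1.0 if chosen == correct_button(sample, targets, attribute) else 0.0


sample = Figure("star", "red", orientation=0)
targets = [Figure("circle", "red"), Figure("star", "blue", orientation=90)]
reward(sample, targets, "shape", chosen=1)  # same shape, different color and orientation
```

The environment can compute this directly; the agent, by contrast, must recover the relevant attribute from exemplars and bridge the delay with working memory.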

In the non-delayed match-to-sample task, the sample figure remains visible while the agent selects a target; working memory (WM) is still required, because the agent has to retain information while shifting its gaze between figures.

Exemplar sessions (few-shot imitative rule learning)

Before the main tests, the agent is shown one or more exemplar trials from which it must learn which attribute (color or shape) to use when matching the sample to the targets.

In each exemplar trial, the correct button in the response section flashes.

After the exemplar trials, the entire screen flashes twice to signal the start of the main test.
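One simple way to frame few-shot imitative rule learning is hypothesis elimination: keep only the candidate attributes under which every flashed button was the unique match. This sketch assumes symbolic figure attributes and the two candidates named in the text (color and shape); the names are hypothetical, and it is not offered as a biological model.

```python
from typing import Dict, List, Sequence, Tuple

Figure = Dict[str, str]  # hypothetical encoding, e.g. {"shape": "star", "color": "red"}
Exemplar = Tuple[Figure, List[Figure], int]  # (sample, targets, index of flashed button)

CANDIDATE_ATTRIBUTES = ("color", "shape")


def consistent_attributes(exemplars: Sequence[Exemplar],
                          candidates: Sequence[str] = CANDIDATE_ATTRIBUTES) -> List[str]:
    """Return the attributes under which each flashed button is the unique match."""
    result = []
    for attr in candidates:
        ok = True
        for sample, targets, flashed in exemplars:
            matches = [i for i, t in enumerate(targets) if t[attr] == sample[attr]]
            if matches != [flashed]:
                ok = False
                break
        if ok:
            result.append(attr)
    return result


exemplar = ({"shape": "star", "color": "red"},
            [{"shape": "circle", "color": "red"}, {"shape": "star", "color": "blue"}],
            1)  # the button under the blue star flashed
consistent_attributes([exemplar])  # only "shape" explains the flash
```

If an exemplar is ambiguous (several attributes survive), further exemplars narrow the set, which is why the text allows one or more exemplar trials.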

Pre-training

Participants can train their agents by designing pre-training sessions such as the following:

- Only one shape is displayed in the target section, and selecting the button below it yields a reward and makes the button flash.

- A non-delayed match-to-sample task with no exemplar session: the reward is given for choosing the figure in the target section that is unambiguously the most similar to the sample.
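The second pre-training session hinges on "unambiguously most similar". A minimal sketch, assuming similarity is the number of shared attribute values (a hypothetical measure; the text does not define one) and that ties yield no reward:

```python
from typing import Dict, List

Figure = Dict[str, str]  # hypothetical symbolic encoding of a figure


def similarity(a: Figure, b: Figure) -> int:
    """Count attributes on which two figures agree (hypothetical similarity measure)."""
    return sum(1 for k in a if k in b and a[k] == b[k])


def pretraining_reward(sample: Figure, targets: List[Figure], chosen: int) -> float:
    """Reward 1.0 only if `chosen` is the unique most similar target to the sample."""
    scores = [similarity(sample, t) for t in targets]
    best = max(scores)
    unique_best = scores.count(best) == 1
    return 1.0 if unique_best and scores[chosen] == best else 0.0
```

Withholding reward on ties keeps the session free of ambiguous feedback, so the agent can learn the generic "choose what resembles the sample" behavior before any attribute-specific rule is introduced.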
