CCNBook Motor in a Nutshell

The aim of this article is to present an actor-critic model based on the CCNBook. As the description of reinforcement learning in the Motor chapter seems a bit ‘roundabout’, this memo tries to simplify it.

The chapter is based on the hypothesis that the basal ganglia (BG) implement actor-critic (AC) reinforcement learning. This hypothesis in turn rests on the finding that the dopamine output from the substantia nigra pars compacta (SNc) encodes the temporal difference (TD) error δ used in AC learning.

Figure 7.6: Basic structure of the actor-critic architecture (from CCNBook)

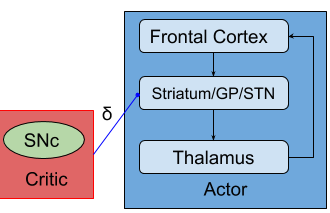

Since SNc provides δ, it is taken to be part of the Critic. Combined with Figures 7.2 and 7.4 of the chapter, this means the Actor comprises the loop of Frontal Cortex, Striatum, and Thalamus, with the dopamine (δ) input going to the Striatum (Figure 1).

Here, the Actor determines its action based on the state representation (of the environment) in the Frontal Cortex. That representation is formed from inputs (not shown) from other cortical areas, the amygdala, the hippocampus, and other subcortical nuclei; the input sources vary with the area of the Frontal Cortex. While the Frontal Cortex provides the output options, the Striatum selects which option is actually output. The reward r to the Critic is explained in the PVLV (Primary Value, Learned Value) model section of the chapter (Figure 7.8).
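As a rough illustration of the Actor's selection step, the sketch below treats the options offered by the Frontal Cortex as a list of numeric preferences and lets a softmax rule stand in for the Striatum's choice. The softmax rule, the `temperature` parameter, and all function names are assumptions made for illustration; neither the CCNBook nor this memo prescribes them.

```python
import math
import random


def softmax(preferences, temperature=1.0):
    """Convert action preferences into selection probabilities."""
    m = max(preferences)  # subtract the max for numerical stability
    exps = [math.exp((p - m) / temperature) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(preferences, rng, temperature=1.0):
    """Sample one option index; a stand-in for striatal selection (assumption)."""
    probs = softmax(preferences, temperature)
    return rng.choices(range(len(preferences)), weights=probs, k=1)[0]


# Hypothetical preferences for three candidate actions in the current state.
prefs = [0.2, 1.5, -0.3]
probs = softmax(prefs)
action = select_action(prefs, random.Random(0))
print(probs, action)
```

A lower `temperature` makes the choice more deterministic, while a higher one makes it more exploratory.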

Figure 7.8: Biological mapping of the PVLV algorithm (from CCNBook)

VS: Ventral Striatum, VTA: Ventral Tegmental Area, PPT: Pedunculopontine Tegmental Nucleus, LHA: Lateral Hypothalamic Area, CNA: Central Nucleus of the Amygdala, CS: Conditioned Stimuli, US: Unconditioned Stimuli (≈ reward)

An apparent problem with Figure 7.8 is that SNc does not receive a reward signal (US). The problem is resolved in Figure PV.1 on the PVLV page, where VTA takes the place of SNc.

Figure PV.1 (from CCNBook)

LHB: Lateral Habenula, RMTg: Rostromedial Tegmental Nucleus

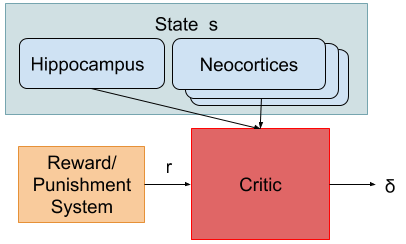

If you want to distinguish all the parts shown in Figure PV.1, you should keep them in your model. However, if the function of the circuit is simply that of the Critic in AC learning, this complexity is unnecessary in engineering terms. Figure 2 shows a model in which it is encapsulated (parts such as the amygdala, VTA/SNc, and part of the striatum are hidden). Note that the TD error is $\delta = r_{t+1} + \gamma V(s_{t+1}) - V(s_t)$, where $r$ stands for the reward, $V(s)$ for the estimated value of state $s$, and $\gamma$ for the discount factor.

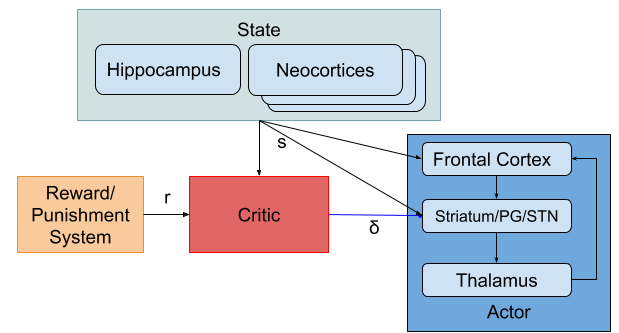
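The TD error above can be computed directly, and the Critic can use it to adjust its value estimates. The following is a minimal sketch assuming a tabular value function and the standard TD(0) update; the learning rate `alpha`, the state names, and the two-step episode are hypothetical and not taken from the CCNBook.

```python
def td_error(reward, v_next, v_current, gamma=0.9):
    """delta = r_{t+1} + gamma * V(s_{t+1}) - V(s_t)"""
    return reward + gamma * v_next - v_current


def critic_update(values, state, next_state, reward, alpha=0.1, gamma=0.9):
    """Move V(state) a small step in the direction of the TD error."""
    delta = td_error(reward, values[next_state], values[state], gamma)
    values[state] += alpha * delta
    return delta


# Hypothetical episode: cue -> outcome -> end, with reward 1 on the last step.
values = {"cue": 0.0, "outcome": 0.0, "end": 0.0}
for _ in range(200):
    critic_update(values, "cue", "outcome", reward=0.0)
    critic_update(values, "outcome", "end", reward=1.0)

# An unpredicted reward gives a positive delta; an omitted, fully
# predicted reward gives a negative one.
surprise = td_error(reward=1.0, v_next=0.0, v_current=0.0)
omission = td_error(reward=0.0, v_next=0.0, v_current=1.0)
print(values, surprise, omission)
```

With repeated experience the value of the rewarded state approaches the reward itself, and the value of the preceding state approaches the discounted value of its successor, so δ shrinks as the reward becomes predictable.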

You might want to distinguish the reward system from the punishment system, but the physiology of such a distinction is not yet well understood. A simple overall AC scheme can be modeled as below (Figure 3). Note that the Frontal Cortex is also included in the State box.
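To make the overall scheme concrete in code, here is a minimal tabular actor-critic sketch. Everything specific in it is an assumption for illustration: the toy corridor environment, the softmax Actor, the simplified preference update (preference += step size × δ), and all parameter values. It is not the CCNBook's model, only one plain instance of the State, Actor, and Critic loop.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def run_actor_critic(episodes=500, n_states=4, alpha_v=0.1, alpha_p=0.1,
                     gamma=0.9, seed=0):
    """Train on a toy corridor: states 0..n_states-1, goal at index n_states."""
    rng = random.Random(seed)
    values = [0.0] * (n_states + 1)                # Critic: V(s); goal stays 0
    prefs = [[0.0, 0.0] for _ in range(n_states)]  # Actor: [left, right]
    for _ in range(episodes):
        s = 0
        for _ in range(100):                       # cap on episode length
            # Actor: choose an action from the current state.
            a = rng.choices([0, 1], weights=softmax(prefs[s]), k=1)[0]
            s_next = max(s - 1, 0) if a == 0 else s + 1
            r = 1.0 if s_next == n_states else 0.0
            # Critic: TD error, broadcast to both Critic and Actor.
            delta = r + gamma * values[s_next] - values[s]
            values[s] += alpha_v * delta
            prefs[s][a] += alpha_p * delta         # simplified actor update
            s = s_next
            if s == n_states:
                break
    return values, prefs


values, prefs = run_actor_critic()
print("V(s):", [round(v, 2) for v in values])
print("preferences [left, right]:", [[round(p, 2) for p in ps] for ps in prefs])
```

The single scalar δ drives both updates, which mirrors the role the memo assigns to the dopamine signal: it trains the Critic's value estimates and, at the same time, strengthens or weakens the action the Actor just selected.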

Reference

Daphna Joel, Yael Niv, Eytan Ruppin: Actor–critic models of the basal ganglia, Neural Networks 15 (2002).