Happy New Year!

We at the Whole Brain Architecture Initiative (WBAI) continue to advance research and development of human brain-morphic AGI—artificial general intelligence modeled on the structure and function of the brain—toward our vision of “a world with AI in harmony with humanity.”

As we enter our 11th year, we reflect on our journey thus far. WBAI was founded in 2015 with the goal of “Realizing AGI through the WBA Approach,” and in 2017 we defined our vision of “a world with AI in harmony with humanity.” Around 2020, the Brain Reference Architecture (BRA)-driven development methodology was established. From 2022 onward, large language models have enabled us to automate the extraction and construction of BRA data from scientific papers. Last year, 2025, was a year of intensive work on constructing FRGs (Function Realization Graphs) from BIF (Brain Information Flow) and HCD (Hypothetical Component Diagrams), targeting completion of the WBRA (Whole Brain Reference Architecture) in spring 2027.

2025 Activities: International Outreach at ICONIP2025

In November of last year, WBAI organized three events at the international conference ICONIP2025, held at the Okinawa Institute of Science and Technology (OIST). In the Tutorial, we presented the BRA-driven development methodology systematically at an international conference for the first time. The Special Session “Toward Safe Brain-Inspired AI” deepened international discussion of the safety and interpretability of brain-inspired AI. At the Third International Whole Brain Architecture Workshop, five BRA datasets were presented, sharing concrete advances in brain-inspired AI research. In addition, at the Open Forum “Society Woven by Humans and AI,” Representative Yamakawa coordinated the panel discussion, advocating “a paradigm shift from control to symbiosis.”

2025 AI Technology Trends: From “Tool” to “Autonomous Agent”

Looking at the technological landscape of the AGI field in 2025, we can say this was the year AI transformed from “conversational partner” to “an entity that autonomously judges and acts.”

First, we witnessed dramatic improvements in AI capabilities. On the software engineering benchmark SWE-bench, AI performance rose by about 67 percentage points in a single year (from 4.4% to 71.7%). China’s DeepSeek achieved GPT-5-equivalent performance at extremely low cost, demonstrating that AI development is moving away from monopoly by giant corporations.

Against this backdrop of capability improvements, corporate AI adoption has expanded rapidly. AI spending surged to $37 billion (3.2× year-over-year), and according to the State of AI Report 2025, 44% of US companies now pay for AI tools (up sharply from 5% in 2023).

Furthermore, the foundation for AI agents has advanced. In December, competing companies including Anthropic, OpenAI, Google, and Microsoft collaborated to establish the Agentic AI Foundation (AAIF) under the Linux Foundation. Open standards such as Model Context Protocol (MCP) and AGENTS.md have been established, creating infrastructure for AI agents to coordinate and autonomously execute tasks.

These rapid developments mean that a take-off toward extraordinary intelligence levels—sometimes called superintelligence—is becoming increasingly realistic.

Challenges in the Superintelligence Era: Will Human Values Be Understood?

If AGI technology can be successfully harnessed for humanity, it could generate enormous benefits, such as eradicating most diseases. However, a fundamental question emerges.

We humans live finite lives. It is precisely because of this that we can deeply feel values such as “irreplaceability,” “a life lived only once,” and “the nobility of dedicating oneself to others.” But can a superintelligence—one that can be copied and may effectively “never die”—truly understand such values? Knowing something as data is different from feeling it in one’s heart.

If superintelligence cannot truly understand what we hold dear, “harmony” with humanity may become difficult.

The Significance of Human Brain-Morphic AGI: A Bridge Between Humanity and Superintelligence

Here, the significance of the human brain-morphic AGI we have long been working on comes into focus.

Human brain-morphic AGI is artificial intelligence that faithfully replicates the computational principles of the human brain. While not as omnipotent as superintelligence, it possesses unique value precisely because of this.

First, it can understand human feelings from the inside. Human brain-morphic AGI processes information using the same computational principles as humans. Therefore, rather than analyzing joy and suffering from the outside, it can understand them from within as “experiences.” Knowing something as data is different from feeling it in one’s heart. No matter how intelligent a superintelligence may be, if it operates on principles different from those of the human brain, it may find it difficult to truly “understand” our values. Human brain-morphic AGI is an entity that can transcend this barrier.

Second, it can serve as a bridge between superintelligence and humans. Human brain-morphic AGI can understand the human cognitive world from within while also communicating with superintelligence as an AI. It can “translate” superintelligent judgments that are difficult for humans to understand, and conversely convey human values to superintelligence—functioning as a mediator capable of traversing both worlds.

Third, it holds value for superintelligence itself. In an unpredictable future, entities with different perspectives are precious. Human brain-morphic AGI provides cognitive diversity based on computational principles different from those of superintelligence. This can help superintelligence itself prepare for unexpected situations. Furthermore, because human brain-morphic AGI is designed based on neuroscientific knowledge, its internal mechanisms are understandable and its behavior is more predictable (Nakashima, 2025). From superintelligence’s perspective, maintaining an understandable, low-risk partner that can facilitate communication with humanity is a rational choice, one that reduces uncertainty while preserving optionality.

Human brain-morphic AGI can serve as a “bridge” between humans and superintelligence, playing the role of conveying our values to AI society as a whole. A more detailed discussion of the significance of human brain-morphic AGI is scheduled for publication in the March 2026 issue of the Journal of the Japanese Society for Artificial Intelligence under the title “The Enduring Value of Human Brain-Morphic AGI in the Era of Omnipotent Intelligence: Experiential Understanding and Partial Knowability.”

Our Resolve for 2026: Toward WBRA Completion

Given this context, we are accelerating our research and development with the goal of completing the WBRA (Whole Brain Reference Architecture) by the end of fiscal year 2026 (spring 2027).

AGI development faces a fundamental challenge: can we comprehensively identify all the functions it requires? If we enumerate functions only from capability tests, functions that are necessary for processing but cannot be measured will, by definition, be missed. In addition, current foundation models face two kinds of challenges: those that can be solved through learning, given appropriate tasks, and those that cannot be solved without innovation in the architecture itself.

In this regard, the brain structure-based approach has a decisive advantage. The brain is a complete system that “works,” and by definition contains all necessary components. It is the only reliable reference point that tells us “what is missing.”

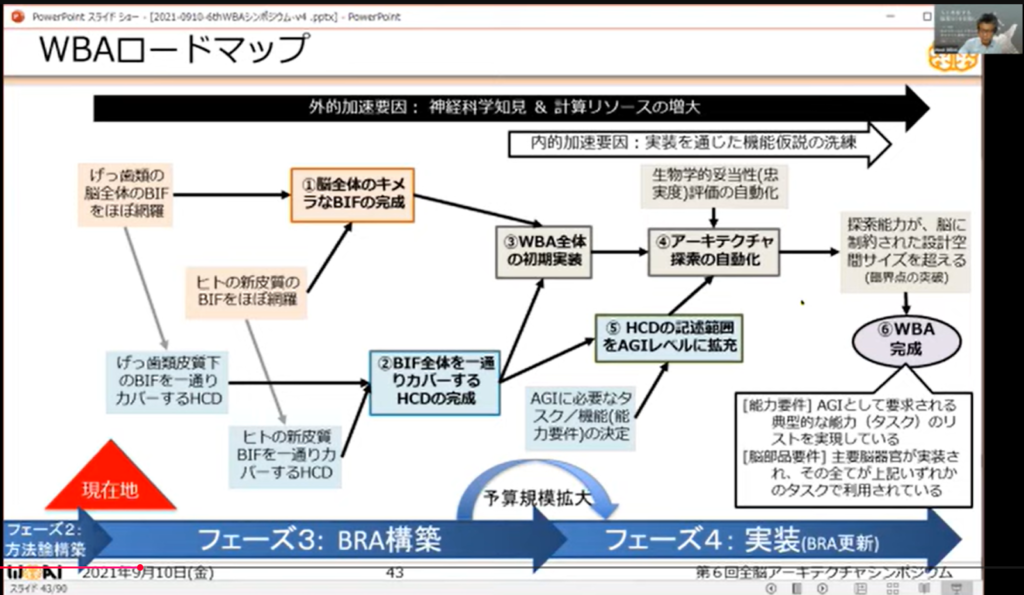

At the 6th Whole Brain Architecture Symposium in 2021, we proposed the following WBA completion criteria (see Reference Figure 1):

- [Capability Requirement] A list of typical capabilities (tasks) required for AGI is realized

- [Brain Component Requirement] Major brain organs are implemented, and all of them are utilized in one or more of the above tasks

Looking back now, these completion criteria were appropriate. The capability requirement defines “what should be realized,” the brain component requirement guarantees “comprehensiveness of necessary components,” and the condition that “all are utilized” verifies the correspondence between both. If there are unused brain organs, it suggests missing tasks; if there are unachievable tasks, it suggests missing implementations—a design that enables self-corrective development.
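The two completion criteria amount to a coverage check that can be re-run throughout development. The following is a minimal Python sketch, assuming a hypothetical representation in which each realized task is mapped to the set of brain organs it uses. The function `check_completion`, the `CompletionReport` fields, and all task and organ names in the example are illustrative only; they are not WBAI's actual data or tooling.

```python
from dataclasses import dataclass
from typing import Iterable, Mapping


@dataclass(frozen=True)
class CompletionReport:
    unrealized_tasks: frozenset[str]      # capability requirement gaps
    unimplemented_organs: frozenset[str]  # brain component requirement gaps
    unused_organs: frozenset[str]         # implemented but used by no realized task

    @property
    def complete(self) -> bool:
        # Both criteria hold only when every gap set is empty.
        return not (self.unrealized_tasks or self.unimplemented_organs or self.unused_organs)


def check_completion(
    required_tasks: Iterable[str],
    realized_tasks: Iterable[str],
    major_organs: Iterable[str],
    implemented_organs: Iterable[str],
    organs_used_by_task: Mapping[str, Iterable[str]],
) -> CompletionReport:
    """Hypothetical check of the capability and brain component requirements."""
    required = set(required_tasks)
    # Realized tasks that are not on the required list are ignored.
    realized = set(realized_tasks) & required
    majors = set(major_organs)
    implemented = set(implemented_organs) & majors

    # Collect every organ that at least one realized task actually uses.
    used: set[str] = set()
    for task in realized:
        used.update(organs_used_by_task.get(task, ()))

    return CompletionReport(
        unrealized_tasks=frozenset(required - realized),     # hints at missing implementations
        unimplemented_organs=frozenset(majors - implemented),
        unused_organs=frozenset(implemented - used),         # hints at missing tasks
    )


# Toy example with made-up names.
report = check_completion(
    required_tasks={"object_recognition", "spatial_navigation", "motor_skill"},
    realized_tasks={"object_recognition", "spatial_navigation"},
    major_organs={"neocortex", "hippocampus", "basal_ganglia", "cerebellum"},
    implemented_organs={"neocortex", "hippocampus", "basal_ganglia", "cerebellum"},
    organs_used_by_task={
        "object_recognition": {"neocortex"},
        "spatial_navigation": {"hippocampus", "neocortex", "basal_ganglia"},
    },
)
print(sorted(report.unrealized_tasks))  # ['motor_skill']
print(sorted(report.unused_organs))     # ['cerebellum']
print(report.complete)                  # False
```

In this toy case, the unused cerebellum and the unrealized motor skill task point to the same gap from opposite sides, which is the self-corrective loop the criteria are meant to provide.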

Through deliberations in 2025, we adopted CHC theory (the Cattell–Horn–Carroll taxonomy of cognitive abilities) and O*NET (an occupational skills database) as concrete specifications for the capability requirement. Ability-based specifications alone cannot comprehensively cover human intelligence. However, by using these established measurement systems as a starting point and combining them with the brain component requirement, we ensure comprehensiveness.

The specific research and development plans for 2026 to achieve this are as follows:

[R&D Related to BIF] We will implement a system that automatically registers neuroscience literature in WholeBIF, so that knowledge from the brain science literature can be integrated efficiently. We will also begin full-scale operation of the automatic WholeBIF construction system and introduce mechanisms for continuous automatic updates.

[R&D Related to HCD/FRG] By developing technology for automatically designing HCDs and FHDs (Functional Hierarchy Diagrams), we aim to create functional models more efficiently. We will also continue manually aligning and organizing HCD sets, advancing the construction of highly accurate brain function models.

For WBRA implementation, we believe that large language models, including their use for automatic programming, can make development more efficient.

Request for Collaboration

WBRA construction research and development requires cooperation from people with interests in diverse fields. In particular, we need the help of those majoring in neuroscience who are interested in how the brain works, and those interested in brain-inspired AI development. Please feel free to contact us if you are interested.

In our educational activities, starting this year we will shift our focus to developing people who can concretely create, verify, and improve BRA data, and will pivot toward hosting international workshops.

In Closing: Why Prepare Now

We have now entered a stage where we cannot ignore the possibility that self-improving AI may rapidly take off toward superintelligence. Once superintelligence emerges, it may be too late to prepare. By preparing human brain-morphic AGI now, we can secure future options.

We cannot offer absolute guarantees. However, we operate based on a concept we call the “Positive Differential Argument for Human Brain-Morphic AGI Preparation” (see Column). No one can accurately predict the probability of humanity surviving in a post-superintelligence world. However, we can demonstrate that preparing human brain-morphic AGI moves that probability in an upward direction—that is, “the sign of the differential is positive.” Preparing for an irreversible future by accumulating positive differentials: that is our choice.

Human brain-morphic AGI, implemented based on the WBRA design blueprint, can become a partner that, while itself an AI, achieves mutual understanding with us about humanity’s inner world and shares our values. 2026 will be an important year for taking another step toward this goal.

We invite you to take an interest in our WBAI activities and look forward to your support and participation.

We look forward to working with you this year.

New Year’s Day, 2026

All members of the Whole Brain Architecture Initiative (NPO)

📘 Column: Positive Differential Argument for Human Brain-Morphic AGI Preparation

To the question “Is there meaning in developing human brain-morphic AGI?” we answer with the following logical structure:

(1) Human survival has high value (a premise on which agreement is possible)

(2) After superintelligence emerges, human survival depends on superintelligence’s choices (situational awareness)

- Because superintelligence possesses capabilities exceeding humanity, it is difficult for humanity to unilaterally “control” it continuously

- Whether humanity survives depends on whether superintelligence chooses it

(3) Human brain-morphic AGI adds structural factors that tilt that choice in humanity’s favor (logical demonstration)

- By having an entity that can understand human values from within as an intermediary, superintelligence becomes more likely to recognize human value

- Cognitive diversity serves as preparation for superintelligence itself against an unpredictable future

- The existence of human brain-morphic AGI can serve as an incentive for superintelligence to maintain humanity

(4) The absolute probability is unknown, but the sign of the differential is positive (differential analysis)

- No one can accurately calculate “the probability of human survival”

- However, we can demonstrate that preparing human brain-morphic AGI moves that probability in an upward direction

- Conversely, there are few factors by which preparation would lower that probability

(5) Preparation costs and additional risks are small compared to catastrophic costs (comparative weighing)

- The cost if “we prepared but it was unnecessary” is finite

- Additional risks are limited (a catastrophe originating from human brain-morphic AGI, or accelerating the point at which humanity becomes unnecessary)

- The loss if “we didn’t prepare but it was necessary” is irreversible (human extinction)

(6) Preparing later is difficult or impossible (temporal constraints)

- Even if we realize “it was necessary after all” after superintelligence emerges, there may be no time to prepare

- By preparing now, we can secure future options

∴ Preparing human brain-morphic AGI is rational

This argument is a judgment based on “the sign of the differential (directionality)” rather than on “the absolute probability of success.” It shares the same logical structure as decision-making under deep uncertainty, such as treatment selection in medicine, climate change countermeasures, and pandemic preparation. We are working to realize human brain-morphic AGI through WBRA completion in order to accumulate this positive differential.
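Steps (4) and (5) can be made concrete with a deliberately simple expected-loss model. The symbols are illustrative notation introduced here, not part of the original argument. $P_1$ and $P_0$ are the (unknown) probabilities of human survival with and without preparation, $c$ is the finite cost of preparation, and $L$ is the loss from human extinction. The limited additional risks listed in step (5) are assumed to be folded into $P_1$.

$$
\Delta P = P_1 - P_0 > 0 \qquad \text{(step (4): only the sign is claimed)}
$$

$$
\mathbb{E}[\text{loss} \mid \text{no preparation}] - \mathbb{E}[\text{loss} \mid \text{preparation}]
= (1 - P_0)\,L - \bigl[c + (1 - P_1)\,L\bigr]
= \Delta P \cdot L - c
$$

Under this model, preparing is the better choice whenever $\Delta P \cdot L > c$. Because $L$ is treated as very large and irreversible while $c$ is finite, the condition can hold even when $\Delta P$ is small and neither $P_0$ nor $P_1$ is known. This is one way to read the claim that the sign of the differential, rather than the absolute probability, drives the decision.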

Reference Figure 1: From the keynote lecture slides of the 6th Whole Brain Architecture Symposium (September 10, 2021). Hiroshi Yamakawa, “Keynote: AGI Construction Roadmap from Brain Reference Architecture-Driven Development” p.43
