New year’s greetings from the Whole Brain Architecture Initiative (WBAI)!

WBAI continued its activities in 2018 with assistance from supporting members, supporters, SIG-WBA, advisors, and other parties.

Our major activities last year included holding the 3rd WBA symposium, participating in the AI-EXPO Tokyo, organizing WBA seminars on the themes of “inference,” “autonomy and generality,” “reinforcement learning in the brain,” and “abduction,” and arranging the 4th WBA hackathon with the theme of “providing AI with gaze control.” In the Kakenhi project “brain information dynamics underlying multi-area interconnectivity and parallel processing,” we also advanced model development along the neocortical framework developed in 2017 [1]. In addition, we held a special symposium at the 28th convention of the Japan Neural Network Society (JNNS 2018) (proceedings) and gave academic presentations both in Japan and abroad (cf. WBAI Policies, FY2018).

AI researchers around the world are gradually advancing toward improving the generality of machine learning to respond to diverse tasks and environments in the fields of continuous learning, lifelong learning, and transfer learning. To enhance the generality of intelligence, it would be necessary to create a mechanism for problem-solving that accumulates knowledge in a granular form and reuses it by combining its pieces. Neural networks have sufficient flexibility to support the reusability required for such a mechanism.

The current artificial intelligence boom based on deep learning seems to have reached technological maturity, and we have seen a range of applications centered on recognition technology. Deep learning is increasingly used as part of practical systems. The “autonomous tidying-up robot” by PFN is a good example in this regard. In deep learning, a mainstream method is to acquire background knowledge through unsupervised learning on a large amount of data and to apply it to other tasks by fine-tuning. Last year, BERT (Google) showed that performance in natural language processing could be improved in this manner. In a specific domain where data is abundant and an appropriate deep neural network architecture exists, it is now possible to solve general problems to a certain extent by using end-to-end learning.

However, when knowledge is reused by being decomposed and coupled dynamically, the number of combinations will be significant, and it is not easy to raise generality beyond a specific domain. The combination of knowledge also needs to be done flexibly for temporal behavior. The brain overcomes these difficulties and realizes general intelligence.

We will encounter other technical barriers on the way to completing AGI. The WBA approach is to constrain the direction of development and obtain clues by developing AGI in line with the architecture of the brain. In this approach, the architecture for developing software by learning from the brain and the associated development methodology are themselves unique and major objects of research.

How did this unique approach take shape? It began with the proposal of basic ideas by Yuuji Ichisugi, Yutaka Matsuo, and Hiroshi Yamakawa at the end of 2013. Through discussion with the initial members of WBAI, the “Central WBA Hypothesis” (below) was proposed by Koichi Takahashi in March 2015. Based on this hypothesis, WBAI was inaugurated with the mission “to create a human-like artificial general intelligence (AGI) by learning from the architecture of the entire brain.”

The Central WBA Hypothesis

“The brain combines modules, each of which can be modeled with a machine learning algorithm, to attain its functionalities, so that combining machine learning modules in the way the brain does enables us to construct a generally intelligent machine with human-level or super-human cognitive capabilities.”

Since its founding in 2015, WBAI has promoted research on an integrated platform as the mechanism to manage computational resources for the execution and learning of multiple modules. However, the major challenge of how to learn from the brain and build software remained.

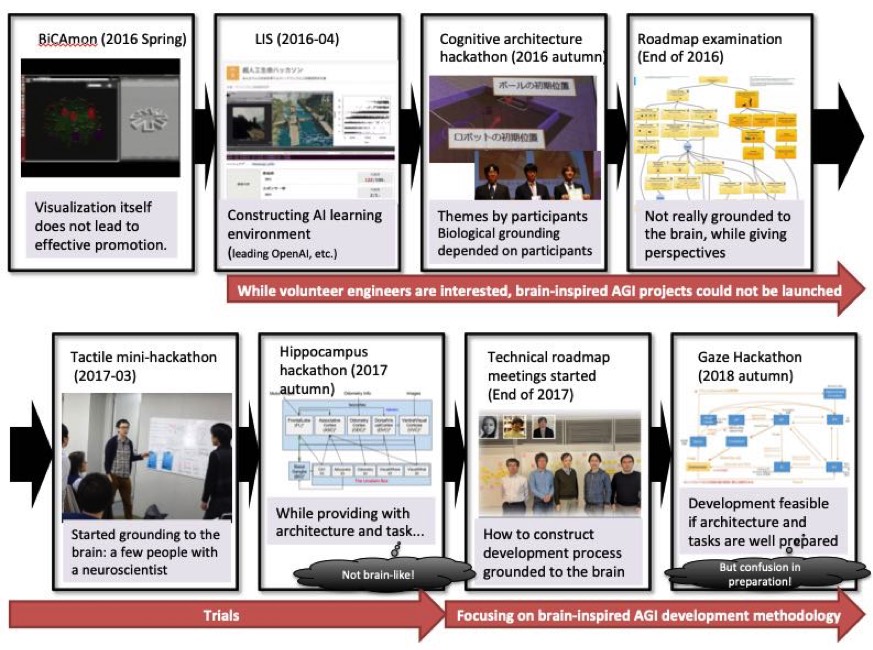

As a result, we had no way to explain to engineers interested in brain-inspired AGI what to build and how to build it, and development projects could not be launched. In 2017, to overcome this situation, we began to learn from the brain together with engineers. In 2018, we made progress on the development methodology for brain-inspired AGI. With these activities, we have now reached the stage of developing part of a system that could be termed WBA. To develop brain-inspired AGI more efficiently, we are beginning to switch the development method from combining machine learning modules to superimposing models of larger functional networks (functional circuits) in the brain (Fig.1 below).

WBAI has been promoting R&D to realize AGI while learning from the architecture of the entire brain. Meanwhile, based on the prediction that AGI will bring about the most significant impact since the Industrial Revolution by automating innovation in science, technology, and the economy, we will proceed together with you, keeping the long-term vision “to create a world in which AI exists in harmony with humanity.” We hope for your continued and further support and patronage this year!

January 2019

Members of the Whole Brain Architecture Initiative

Steps toward our method of developing AGI by learning from the brain

In the following section, we describe in more detail the steps we have taken so far toward a methodology for building AGI by learning from the brain.

Fig. 1: How to create AGI by learning from the brain

In the spring of 2016, a tool to monitor the activity of cognitive architecture (BiCAmon) was developed, but such visualization alone could not drive development.

Meanwhile, a simulation environment for AGI learning (Life in Silico: LIS) was developed by Dwango Artificial Intelligence Laboratory and released along with Dwango’s “super Artificial Life hackathon.” It was also used as a platform for the second WBA hackathon. Four outstanding results from the hackathon were presented at the event “Communal making of cognitive architecture” of the 30th Anniversary of the Japanese Society for AI (JSAI), and the result that was grounded in the brain was awarded as the most outstanding. In this way, we gained a certain outlook on the method of developing cognitive architecture. However, it also became clear that the development of brain-inspired AI should be led by individuals with expertise in both neuroscience and engineering.

At the end of 2016, we discussed how experts in neuroscience and engineers could work together to build software by learning from the brain. In this discussion, held together with engineers of SIG-WBA, we examined existing road maps for AGI, including those by Good AI, Facebook, and AGI researchers. However, as we confirmed that they were not grounded in the brain, we kept them only as references.

In early 2017, engineers without significant knowledge of neuroscience held a “tactile mini-hackathon” (see below) to make a computational model of the brain. It revealed that if neuroscientists and a small number of engineers engaged in intensive discussion, they could build a small-scale artificial neural network model of the brain, which gave us a workable outlook for the development method.

Following this success, we held the 3rd WBA hackathon with the theme of the hippocampus in the autumn of 2017. We provided participants with stepwise tasks and execution environments corresponding to animal experiments and a rough referential architecture. While the winning team realized a system to carry out multiple tasks, the implementation was not sufficiently close to the architecture of the brain and the importance of solving multiple tasks in a single system was not well communicated to participants.

From these experiences, the question “how can we develop brain-inspired AGI of a certain scale and complexity through the collaboration of engineers?” surfaced. At the end of 2017, we discussed the relationship among the abilities that the completed AGI would demonstrate, the partial functions that support them, and the relation between those functions and brain organs. Thus, we came to focus more on the “development methodology” for creating brain-inspired AGI.

In the first half of 2018, the following advances in development methodology were made:

- Prepare related tasks so that generality can be evaluated

- Prepare brain-inspired architecture related to the tasks

- Develop sample programs with stub modules

- Prepare evaluation criteria for results (the GPS criteria)

Regarding the architecture, the modules corresponding to the brain organs and their functions, the connections between the modules, and the computational semantics of the signals flowing through them are being clarified. Development with stubs means providing scaffolding for development by preparing, as sample programs, a combination of built-in modules that can solve specific tasks. The GPS criteria consist of: 1) “functionally general”: whether a system can execute multiple tasks, as general intelligence requires; 2) “biologically plausible”: whether its mechanisms are similar to those of the brain, as WBA requires; 3) “computationally simple”: whether the implementation is as simple as possible.
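The idea of development with stubs can be illustrated with a minimal Python sketch. It is only an illustration under our own assumptions, not the sample code distributed for the hackathon: the module names (`stub_perception`, `stub_action_selection`, `argmax_action_selection`), the signal keys, and the `Agent` class are all hypothetical. The point it shows is that a pipeline of trivial stub modules runs end to end from the start, so that a participant can replace one module at a time.

```python
from typing import Callable, Dict, List

# A module maps the signals seen so far to the new signals it emits.
# All names below are hypothetical and chosen only for illustration.
Signals = Dict[str, object]
Module = Callable[[Signals], Signals]


def stub_perception(signals: Signals) -> Signals:
    """Stub: forward the raw observation unchanged as the 'feature' signal."""
    return {"feature": signals["observation"]}


def stub_action_selection(signals: Signals) -> Signals:
    """Stub: always emit action 0 so the whole pipeline runs end to end."""
    return {"action": 0}


def argmax_action_selection(signals: Signals) -> Signals:
    """Drop-in replacement: pick the index of the largest feature value."""
    feature = signals["feature"]
    return {"action": max(range(len(feature)), key=lambda i: feature[i])}


class Agent:
    """Runs the connected modules in order, accumulating their signals."""

    def __init__(self, modules: List[Module]) -> None:
        self.modules = modules

    def step(self, observation: List[float]) -> int:
        signals: Signals = {"observation": observation}
        for module in self.modules:
            signals.update(module(signals))
        return signals["action"]


# The scaffold works with stubs only; one module is then swapped out.
stub_agent = Agent([stub_perception, stub_action_selection])
improved_agent = Agent([stub_perception, argmax_action_selection])
```

Because every module shares the same interface, the stub and its replacement are interchangeable without touching the rest of the architecture.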

As a result of this development methodology, the 4th WBA hackathon in the autumn of 2018 reached the point of developing a partial WBA. However, its preparation was confused and involved a great deal of work. In retrospect, much of the confusion lay in the lack of a division of labor among setting tasks, designing architecture, and implementing samples. The development method of implementing and combining modules corresponding to brain organs along the brain architecture naïvely followed the “Central WBA Hypothesis.” However, that approach alone did not guarantee efficient preparations.

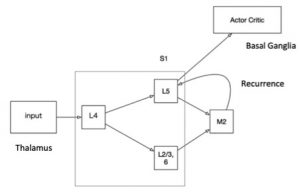

Against this background, from the middle of 2018, we revised the development methodology to allocate the required expertise through a division of labor, with architects designing functional circuits as software specifications and engineers implementing them [2]. Here, a functional circuit is a subgraph of the architecture together with its functional semantics. Thus, we will gradually build WBA by superimposing functional circuits, each expressed as connected brain organ modules.
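One way to picture this is the following Python sketch, which treats a functional circuit as a partial graph (subgraph) of the architecture with a label for its function, and treats superimposition as the union of the modules and connections of several circuits. This is our own simplified reading, not a specification from WBAI: the `FunctionalCircuit` class, the `superimpose` function, and the module names are hypothetical placeholders and make no anatomical claim.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set, Tuple

# A directed connection between two named modules (hypothetical names).
Edge = Tuple[str, str]


@dataclass(frozen=True)
class FunctionalCircuit:
    """A subgraph of the architecture plus a label for what it does."""
    name: str
    function: str
    edges: FrozenSet[Edge]

    @property
    def modules(self) -> FrozenSet[str]:
        # Every module that appears at either end of a connection.
        return frozenset(m for edge in self.edges for m in edge)


def superimpose(
    circuits: Iterable[FunctionalCircuit],
) -> Tuple[Set[str], Set[Edge]]:
    """Union the modules and connections of all given circuits."""
    modules: Set[str] = set()
    edges: Set[Edge] = set()
    for circuit in circuits:
        modules |= circuit.modules
        edges |= circuit.edges
    return modules, edges


# Two illustrative circuits that share some modules (placeholder names).
circuit_a = FunctionalCircuit(
    name="circuit_a",
    function="illustrative function A",
    edges=frozenset({
        ("visual_cortex", "frontal_eye_field"),
        ("frontal_eye_field", "superior_colliculus"),
    }),
)
circuit_b = FunctionalCircuit(
    name="circuit_b",
    function="illustrative function B",
    edges=frozenset({
        ("visual_cortex", "basal_ganglia"),
        ("basal_ganglia", "superior_colliculus"),
    }),
)

all_modules, all_edges = superimpose([circuit_a, circuit_b])
# Modules used by both circuits are candidates for a single shared implementation.
shared = circuit_a.modules & circuit_b.modules
```

In this reading, the modules in `shared` are exactly the places where two circuits overlap and where separately written implementations would later need to be reconciled.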

For an engineer to write a program, it is sufficient to understand the information processing aspect of functional circuits. If one is motivated to develop brain-inspired AI with expertise in machine learning, one can implement a program that actually runs based on the specification created by an architect.

Meanwhile, architects design functional circuits corresponding to certain tasks, using the existing architecture and knowledge of neuroscience. It is natural to think that a function is exhibited by a circuit of modules in the brain, and examining a function at the level of a circuit is easier than dealing with single modules. For this reason, while architects need both a certain understanding of neuroscience and a sense for information science, they are not required to be able to implement programs.

In 2019, we will further advance the brain-inspired AGI development method to superimpose functional circuits and would like to promote R&D so that we can efficiently build brain-inspired AGI as a development community. Specifically, we will update the architecture from implemented functional circuits, design new functional circuits by combining architecture and neuroscience knowledge, and develop technology to support the design with neural activity data.

As the methodology and development progress, duplication will occur among the implemented modules that make up functional circuits. Thus, from 2020 onwards, the challenge will be to merge modules appropriately (e.g., by refactoring) into an integrated general intelligence.

References:

- [1] Kosuke Miyoshi, Naoya Arakawa, Hiroshi Yamakawa, Do top-down predictions of time series lead to sparse disentanglement? (poster) (JNNS 2018, S2-4, pp.15–16, 2018)

- [2] Masahiko Osawa, Kotaro Mizuta, Hiroshi Yamakawa, Yasunori Hayashi, and Michita Imai, Development of Biologically Inspired Artificial General Intelligence Navigated by Circuits Associated with Tasks (JNNS 2018, S2-3, pp.13–14, 2018) (proceedings)

[Column] The tactile mini-hackathon: starting to learn from the brain (March 18-20, 2017)

The mini-hackathon aimed to realize an artificial neural network model which performs tactile information processing imitating the partial architecture (somatosensory perception and motion) of the neocortex. It was confirmed that if neuroscientists could closely support a small number of excellent engineers, an artificial neural network model for a small sub-region of the brain could be constructed.

- Based on a neuroscientist’s interpretation of a paper (Manita et al. 2015), machine learning engineers implemented an artificial neural network.

- A machine learner capable of tactile information processing was created by mimicking the connective architecture of the brain region related to somatosensory perception and motion.

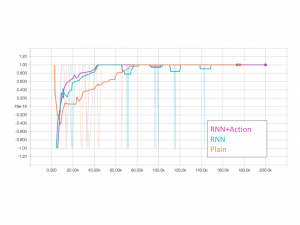

- Evaluation of the performance of the machine learner revealed that convolutional and recursive networks were effective for distinguishing different types of tactile stimuli.

Fig. 2 Discussion between engineers and neuroscientists

Fig. 3 ANN model of partial connectome

Fig. 4 Evaluation of tactile classification with ANN
