The Whole Brain Architecture Initiative (WBAI) believes that the shortest path to artificial general intelligence (AGI) is the whole-brain architecture approach, which learns from the entire brain. To promote the development of whole-brain architecture, we have held four hackathons. This year, we are holding the 5th WBA Hackathon with the theme of working memory. While working memory is necessary for ‘fluid’ cognitive functions such as planning, few computational neuroscientific or artificial neural network (ANN) models of it exist. Thus, its realization with brain-inspired ANNs is a fundamental challenge on the way to AGI.

Time Period

From May to October, 2021

Task Overview

- Match-to-sample tasks will be used. In a match-to-sample task, the agent judges whether the presented figures are the same. In the delayed match-to-sample task, the judgment must be made after the sample figure disappears from the screen, so working memory is required.

- Operations such as rotation and expansion may be applied to the figures to be compared (invariant object identification).

- Given a new task set, the agent is to learn which features to attend to from example sessions presented before it works on the task (few-shot imitative rule learning).

- Agents are allowed to learn by playing the game with rewards over many trials (reinforcement learning, RL). The agent is also allowed to pre-train brain organs in the environment before it is given the task set.

- The agent is assumed to have active vision consisting of central and peripheral vision (like human beings). Only the central field of vision (fovea) has high resolution. In order to recognize a figure precisely, the agent has to capture the figure in the fovea by moving its gaze. Note that comparing figures this way requires working memory.
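The active-vision constraint in the last item can be illustrated with a minimal sketch. It is not taken from the hackathon environment: the function name `foveate`, its parameters `radius` and `block`, and the use of a 2-D list of pixel values are all assumptions made for illustration. The sketch returns a full-resolution patch around the gaze point and a coarse, average-pooled view of the whole image.

```python
def foveate(image, gaze_row, gaze_col, radius=1, block=2):
    """Split a 2-D list of pixel values into a sharp fovea and a coarse periphery.

    All names and default sizes here are hypothetical, chosen for illustration.
    """
    height, width = len(image), len(image[0])

    # High-resolution patch around the gaze point, clipped at the image border.
    top, bottom = max(0, gaze_row - radius), min(height, gaze_row + radius + 1)
    left, right = max(0, gaze_col - radius), min(width, gaze_col + radius + 1)
    fovea = [row[left:right] for row in image[top:bottom]]

    # Low-resolution periphery: average each block x block tile of the full image.
    periphery = []
    for r in range(0, height, block):
        pooled_row = []
        for c in range(0, width, block):
            tile = [image[i][j]
                    for i in range(r, min(r + block, height))
                    for j in range(c, min(c + block, width))]
            pooled_row.append(sum(tile) / len(tile))
        periphery.append(pooled_row)

    return fovea, periphery


if __name__ == "__main__":
    image = [[r * 4 + c for c in range(4)] for r in range(4)]
    fovea, periphery = foveate(image, gaze_row=1, gaze_col=1)
    print(fovea)
    print(periphery)
```

Because only the patch around the gaze point keeps its detail, an agent that compares two figures has to look at them one after the other and carry the first one in memory, which is the point made in the item above.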

Please see below for more information on the task.

Sample architecture

Sample cognitive architecture code is provided. It consists of a visual system and a reinforcement learner that models the basal ganglia, and it can solve a non-delayed match-to-sample task without active vision after being trained in the task environment.

The sample architecture provides empty modules (stubs) that correspond to brain organs. Participants are to implement working memory by filling in the stubs. If you alter the architecture, you must provide thorough documentation.

The details of the sample architecture can be found here.

Registration

Registration should be team-based (consisting of one or more members). Please create a team account on CodaLab and a team repository on GitHub beforehand and specify the following in the registration form.

- CodaLab team account name

- GitHub team repository URL

- Name of the contact person

- Email address of the contact person

- Affiliation of the contact person

- Number of team members

- Number of student team members

Please place an Apache License (Version 2.0) file (PDF) with an appropriate author name in the team repository.

Registration Period = Hackathon Period

You can register after trying out your code, recruiting team members, and/or communicating with the organizers and/or other participants, on CodaLab.

Hackathon Product to be Evaluated

After the hackathon closes, the organizers will collect from each team the latest version timestamped before 24:00 GMT as its submission for evaluation.

Scores on CodaLab

During the hackathon, participants will be able to upload and try out their code on the CodaLab platform. The evaluation will be based on the final score at closing.

Readme file

Please explain your modifications to the sample code (architecture) in fewer than 1,000 words of English text (figures are welcome) in the README.md of the team repository you specified at registration.

Biological plausibility will be evaluated. Please justify the biological plausibility of your alterations by referring to neuroscientific literature for each modified module.

Source code

The source code on the GitHub repository at the deadline will be evaluated. The environment part (to be specified here) should not be altered.

Evaluation

Evaluation will be based on the last scores on CodaLab and on your justification of biological plausibility in the README, taken from the deadline snapshots of the GitHub repositories.

Prizes and cash (max. 100,000 JPY) will be awarded to teams with outstanding results.

Performance

The submitted code is scored with the task environment. The following criteria are used in the scoring:

- Figure shape identification

- Figure color identification

- Invariant object identification for the reduction and rotation of figures

- Positional relation identification between objects

- Delayed matching

- Choice of feature sets to be identified: shape, color, or positional relation

- The use of active vision

Please refer here for the scoring details.

Scores will be used to evaluate the generality of agents as well as performance for each task.

Biological Plausibility

Biological plausibility will be judged mainly from the README describing alterations to the sample architecture. Please make your code readable, as the consistency between the README and the code will be checked.

WBAI generally uses the GPS criteria for evaluation, so we recommend that you look into them. §3.3 and §4.2 of a paper from WBAI can be of help as well.

Schedule

- Hackathon period: 2021-05-01 to 2021-10-31

- Orientation (with ‘Hands-on’)

- Announcement of the results: 2021-11-30 (tentative)

- Awarding: TBD

Sponsors Wanted

We are seeking sponsors who can help provide awards for the participants.

Please contact us here.

Q&A and Team Building

We will use a Slack workspace (to join, email us at hackathon2021 [at] wba-initiative.org) and the forum on CodaLab as the community spaces for this hackathon.

If you have any questions, please ask them there.

Also, please use the Slack workspace or CodaLab’s team management features to help create your team.

Organizations

Co-hosted by the Whole Brain Architecture Initiative

Co-hosted by Cerenaut

Supported by:

- RIKEN Center for Biosystems Dynamics Research

- Couger Inc.

- The Kakenhi project “Brain information dynamics underlying multi-area interconnectivity and parallel processing”

- SIG-AGI of the Japanese Society for Artificial Intelligence

- Grant-in-Aid for Scientific Research on Innovative Areas “Comparison and Fusion of Artificial Intelligence and Brain Science”

- Japanese Neural Network Society

References

Detailed information for the hackathon is found here.

The Architecture of Working Memory

While the mechanism of working memory has not been completely elucidated, the following might be said:

- Since working memory is involved in perceptual representation, sensory areas (neocortices other than the frontal area) are involved.

- The content of working memory (perceptual content of the past) and the present perceptual content must be distinguished within the cortex.

- Since working memory is by definition involved in executive function, the frontal lobe, which is thought to support executive function, is involved.

- Thus, working memory involves a network between the frontal lobe and the sensory cortices.

- The executive function (frontal lobe) controls the retention and termination of working memory by interacting with the perceptual areas.
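The points above can be sketched as a small gated buffer. This is only an illustration under stated assumptions, not the sample architecture's implementation: the class name `GatedWorkingMemory` and the `store` and `clear` control signals are hypothetical. The sketch keeps the retained content separate from the present percept and lets an executive signal decide when to retain and when to terminate retention.

```python
class GatedWorkingMemory:
    """Toy buffer: an executive signal gates what the perceptual side retains.

    Hypothetical names throughout; a sketch, not the hackathon's module API.
    """

    def __init__(self):
        self.content = None  # retained (past) perceptual content

    def step(self, percept, store=False, clear=False):
        # The executive side (frontal lobe in the text) issues store/clear.
        if clear:
            self.content = None      # terminate retention
        if store:
            self.content = percept   # retain the current percept
        # Present percept and memory content are returned separately,
        # so downstream modules can tell them apart.
        return percept, self.content


if __name__ == "__main__":
    wm = GatedWorkingMemory()
    print(wm.step("triangle", store=True))
    print(wm.step(None))              # sample occluded, memory persists
    print(wm.step("square"))          # new percept does not overwrite memory
    print(wm.step("square", clear=True))
```

A biologically motivated implementation would replace the single slot with recurrent activity in a cortical model, but the separation of control (store, clear) from content is the part the list above describes.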

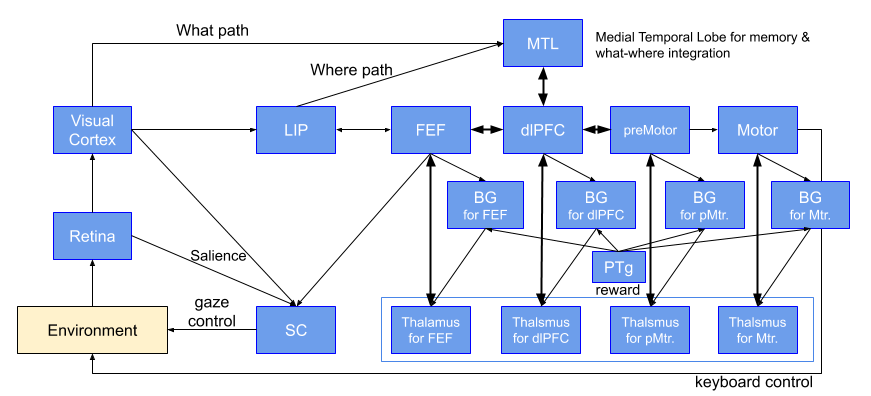

The sample architecture is based on the following architecture.

Fig. 1: whole brain reference architecture for the 5th WBA hackathon

SC:superior colliculus, LIP: lateral parietal, FEF: frontal eye field, BG: basal ganglia, PTg: pedunculopontine nucleus

Related Event: Working Memory Modelathon

Hackathon Task Details

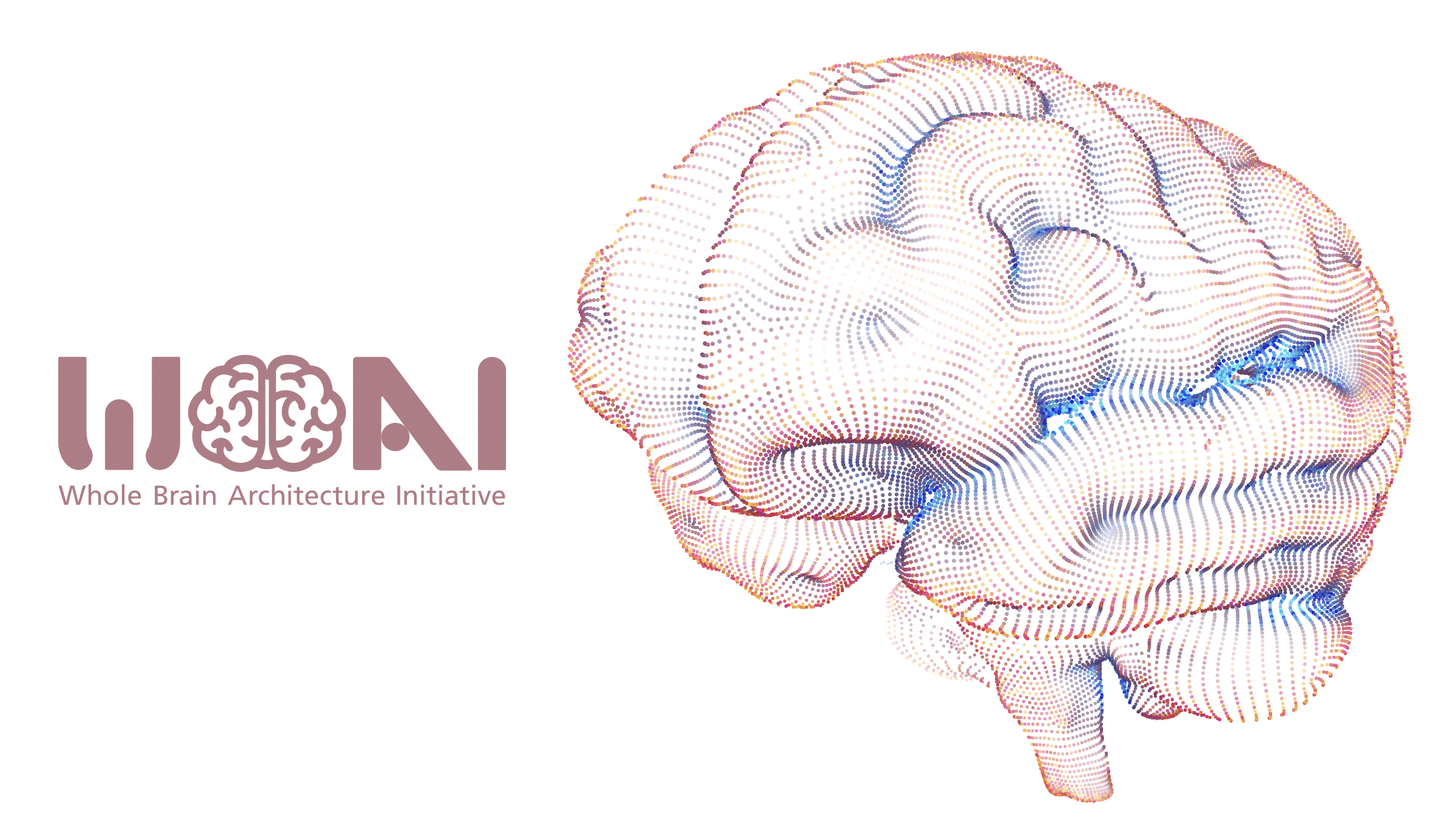

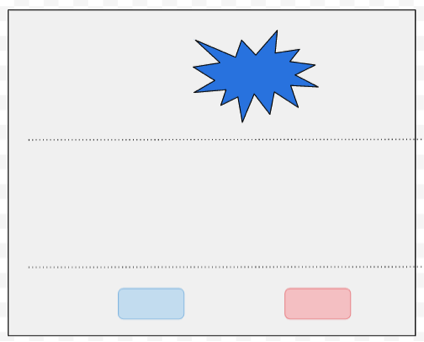

Test Screen

The screen presented to the agent consists of three sections (Fig. 2).

Main tests

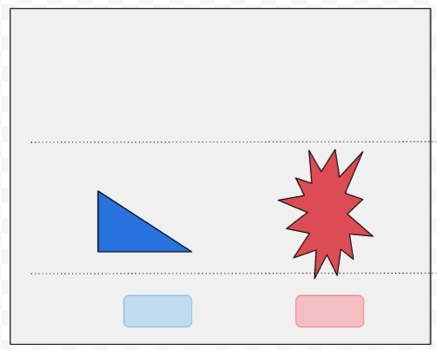

In the delayed sample matching task, the sample figure is first presented without target figures.

Then the sample figure is occluded, and the target figures are displayed after a period of time (up to several seconds). While the target figures are being displayed, the agent chooses a button in the response section (Fig. 4).

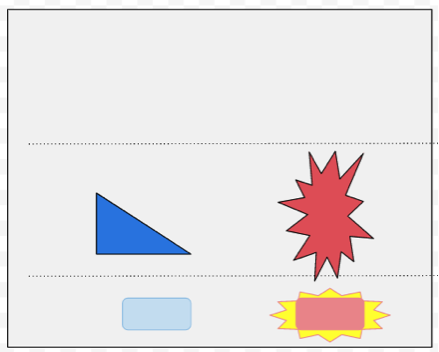

After a certain amount of time (up to a few seconds), the button corresponding to the correct target will flash (Fig. 5). At this point, the targets are still visible.

If the agent has responded correctly, it is rewarded. The correct answer is to choose the button below the ‘same’ figure as the sample figure (in the example above, the shape of the correct figure is the same as the sample, while the color and orientation differ).

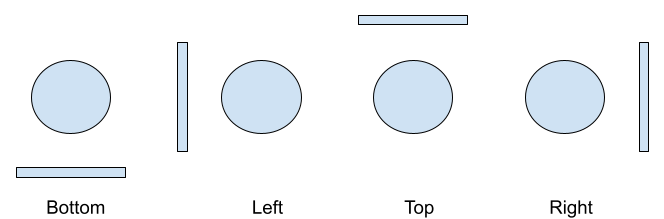

Either the shape, color, or positional relation is used to judge “sameness.” As shown in Fig. 6, the positional relation is identified by the position of a bar relative to the main figure.

In a non-delayed sample matching task, the sample figure remains visible while the agent selects a target. Working memory (WM) is still required because the agent has to retain information while shifting its gaze.
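The flow of a delayed trial can be sketched as follows. This is a simplified illustration, not the task environment's code: figures are represented as dictionaries with the hypothetical keys `shape`, `color`, and `bar_position`, and the function names `choose_target` and `run_delayed_trial` are assumptions. The sample is stored, occluded during the delay, and then compared with the targets on a single feature.

```python
def choose_target(remembered_sample, targets, feature):
    """Return the index of the first target matching the sample on `feature`."""
    for index, target in enumerate(targets):
        if target[feature] == remembered_sample[feature]:
            return index
    return None  # no target matches


def run_delayed_trial(sample, targets, correct_index, feature):
    """Simulate one delayed match-to-sample trial and return (choice, reward)."""
    # Sample phase: the sample is visible and is copied into working memory.
    remembered = dict(sample)
    # Delay phase: the sample is occluded; only `remembered` is available.
    # Target phase: the agent picks the button under the matching target.
    choice = choose_target(remembered, targets, feature)
    # Feedback phase: the agent is rewarded only for the correct button.
    reward = 1 if choice == correct_index else 0
    return choice, reward


if __name__ == "__main__":
    sample = {"shape": "triangle", "color": "red", "bar_position": "left"}
    targets = [
        {"shape": "square", "color": "red", "bar_position": "right"},
        {"shape": "triangle", "color": "blue", "bar_position": "top"},
    ]
    print(run_delayed_trial(sample, targets, correct_index=1, feature="shape"))
    print(run_delayed_trial(sample, targets, correct_index=1, feature="color"))
```

In the example, matching on shape selects the second target and is rewarded, while matching on color selects the first target and is not, which shows why the agent must know which feature set defines “sameness” for the current task.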

Exemplar sessions (few-shot imitative rule learning)

The agent is shown one or more trials before the main tests so that it can learn which feature set (shape, color, or positional relation) to use for matching the sample with a target.

In a trial, the correct button in the response section flashes.

After the exemplar trials, the entire screen flashes twice to signal the start of the main test.
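One simple way to read the matching rule off the exemplar trials is to keep only the feature sets that explain every flashed button. The sketch below assumes a symbolic representation that the environment does not provide directly: each exemplar is a hypothetical tuple `(sample, targets, correct_index)` of feature dictionaries, and the names `FEATURES` and `infer_rule` are illustrative.

```python
FEATURES = ("shape", "color", "bar_position")  # hypothetical feature keys


def infer_rule(exemplars):
    """Return the features consistent with every exemplar trial.

    A feature is consistent with a trial when the flashed (correct) target
    matches the sample on it and no other target does.
    """
    candidates = []
    for feature in FEATURES:
        consistent = all(
            targets[correct][feature] == sample[feature]
            and all(
                target[feature] != sample[feature]
                for index, target in enumerate(targets)
                if index != correct
            )
            for sample, targets, correct in exemplars
        )
        if consistent:
            candidates.append(feature)
    return candidates


if __name__ == "__main__":
    sample = {"shape": "triangle", "color": "red", "bar_position": "left"}
    targets = [
        {"shape": "square", "color": "red", "bar_position": "right"},
        {"shape": "triangle", "color": "blue", "bar_position": "top"},
    ]
    # The button under the second target flashed in the exemplar trial.
    print(infer_rule([(sample, targets, 1)]))
```

If more than one feature survives, further exemplar trials narrow the list down. An actual submission would have to extract these features from foveated images rather than receive them as labels.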

Pre-training

Participants can train their agents by designing pre-training sessions such as the following.

- Only one shape is displayed in the target section and selecting the button below leads to a reward and button flashing.

- Non-delayed sample matching task with no exemplar session: the reward is given upon choosing the figure in the target section that is unambiguously most similar to the sample.

The Scoring Details

Each task is run ten times, and the average score is used.

| Task | Score |
| --- | --- |
| Non-delayed shape | 60 |
| Non-delayed color | 20 |
| Non-delayed bar position | 20 |
| Delayed shape | 120 |
| Delayed color | 40 |
| Delayed bar position | 40 |
| Total | 300 |
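The arithmetic behind the table can be sketched as follows. The interpretation is an assumption, not the official scoring code: the sketch treats each table entry as the maximum number of points for that task and each run result as a success rate between 0 and 1, averages the runs per task, and sums the weighted averages. The names `TASK_POINTS` and `total_score` are hypothetical.

```python
# Points per task, copied from the table above (assumed to be maxima).
TASK_POINTS = {
    "Non-delayed shape": 60,
    "Non-delayed color": 20,
    "Non-delayed bar position": 20,
    "Delayed shape": 120,
    "Delayed color": 40,
    "Delayed bar position": 40,
}


def total_score(run_results):
    """Sum points weighted by the average success rate over each task's runs.

    `run_results` maps a task name to a list of per-run success rates in
    [0, 1]; this input format is an assumption made for illustration.
    """
    total = 0.0
    for task, points in TASK_POINTS.items():
        runs = run_results.get(task, [])
        average = sum(runs) / len(runs) if runs else 0.0
        total += points * average
    return total


if __name__ == "__main__":
    perfect = {task: [1.0] * 10 for task in TASK_POINTS}
    print(total_score(perfect))
    half_shape = {"Non-delayed shape": [1.0] * 5 + [0.0] * 5}
    print(total_score(half_shape))
```

Under this reading, the delayed tasks carry twice the weight of their non-delayed counterparts, and the shape tasks carry three times the weight of the color and bar-position tasks.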
